
Integrating AWS Bedrock and MongoDB (Part 2) - Agentic Interaction

12 Dec 2024

Introduction

In this two-part series we demonstrate two different ways of integrating AWS Bedrock with MongoDB Atlas, the cloud-hosted version of MongoDB.

Our aim is to show how AI solutions such as chatbots, agents or other automation processes can be built against live transactional (OLTP) data stored in a modern database.

In the previous article, we demonstrated a fully managed knowledge base search tool in Bedrock and used retrieval-augmented generation (RAG) to access both structured and unstructured data.

In this article we show how to give an autonomous Bedrock Agent access to a MongoDB database as a tool. The agent then decides for itself when to use that tool.

What Are Agents?

Agents are gaining a lot of attention as a new deployment model for AI and LLMs. An agent is what we get when we combine a language model with one or more tools. Each tool is some form of computational resource, and by combining an LLM with these additional resources we empower the language model to solve specific problems.

Agents can be given a goal to pursue, such as in our case “You are an HR representative. Send a personalised email to each of our employees with recommendations for how they can upskill”, and are then left to reason through the problem. In this example the tools are effectively API calls: the ability to query MongoDB, run a Google Search and send messages to an SQS queue.

Solution Summary

In this article we show how an agent can streamline the work of an HR representative whose task is to give every employee information about how to upskill within their current role. The requirement is to send this out by email every six months. The process flow consists of the following six steps:

- Retrieve Employee Information: Look up each employee's details (job title, department and location) in a MongoDB collection

- Determine Skillset: Infer their professional needs based on their job role and location

- Search for Courses: Use online tools (e.g. APIs or LLM knowledge) to find three suitable qualifications/courses to recommend

- Personalise Recommendations: Prioritise courses based on proximity to their location and relevance to their role

- Generate Personalised Emails: Format the recommendations into an email template

- Send Emails to SQS Queue: Queue the personalised messages in an SQS queue ready for distribution to employees
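In the demo the agent performs the prioritisation step itself, guided only by its instructions. As a rough illustration of the ranking rule those instructions describe (proximity first, then relevance to the role, then ratings), a deterministic version might look like the sketch below. The `Course` fields, the numeric relevance and rating values, and the choice to rank "Online" between a same-city course and a course elsewhere are all assumptions for illustration; nothing in the demo computes them explicitly.

```python
from dataclasses import dataclass


@dataclass
class Course:
    # Hypothetical fields; in the demo the agent infers these from search results
    title: str
    location: str      # e.g. "London" or "Online"
    relevance: float   # 0.0-1.0, how closely the course matches the role (assumed)
    rating: float      # e.g. average review score (assumed)


def proximity_rank(course_location: str, employee_location: str) -> int:
    """Lower is closer: same city first, then online (an assumption), then elsewhere."""
    if course_location.lower() == employee_location.lower():
        return 0
    if course_location.lower() == "online":
        return 1
    return 2


def prioritise(courses, employee_location, top_n=3):
    # Sort by proximity (ascending), then by relevance and rating (both descending)
    ranked = sorted(
        courses,
        key=lambda c: (proximity_rank(c.location, employee_location),
                       -c.relevance, -c.rating),
    )
    return ranked[:top_n]
```

Encoding the priorities as a tuple sort key keeps the ordering rule in one place: later elements only break ties in earlier ones, which mirrors "proximity, then relevance, then ratings".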

Note that this solution doesn’t actually send the emails, as the focus is on the agentic aspect.

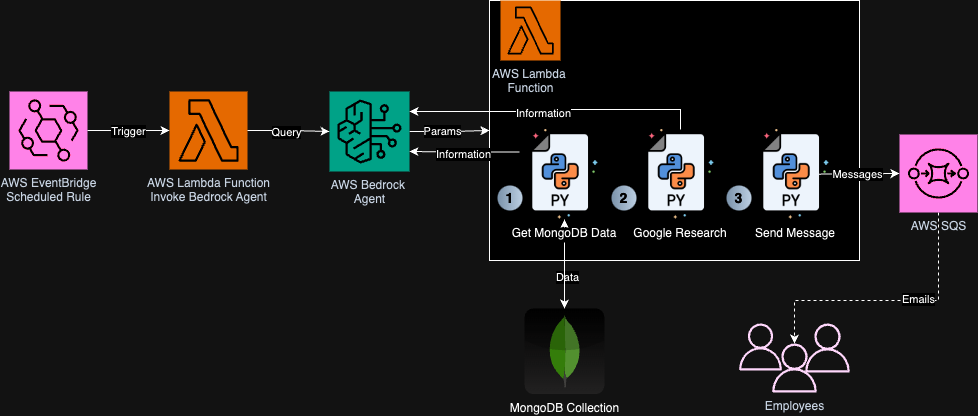

Architecture

Our architecture involves creating an agent in AWS Bedrock. The agent has access to several tools in the form of Python functions within a single AWS Lambda function.

Workflow

- EventBridge triggers the workflow periodically

- A dedicated AWS Lambda function invokes the Bedrock Agent, which executes one or more of the available Lambda function tools:

  - Retrieve the MongoDB data

  - Research relevant courses using Google Search

  - Send an email message to an SQS queue for future distribution
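The article does not list the code of the dedicated trigger Lambda function. A minimal sketch, assuming the agent and alias IDs are supplied through hypothetical `agent_id` and `agent_alias_id` environment variables, could call the `bedrock-agent-runtime` client's `invoke_agent` API and join the text chunks streamed back in the `completion` event stream. The prompt text is illustrative, and the optional `client` argument exists only so the function can be exercised without AWS credentials.

```python
import os
import uuid


def collect_completion(response):
    """Join the text chunks streamed back by invoke_agent, skipping non-chunk events."""
    parts = []
    for event in response["completion"]:
        chunk = event.get("chunk")
        if chunk:
            parts.append(chunk["bytes"].decode("utf-8"))
    return "".join(parts)


def lambda_handler(event, context, client=None):
    # Hypothetical trigger Lambda, run on the EventBridge schedule
    if client is None:
        import boto3  # imported lazily so the helper above can be tested without AWS
        client = boto3.client("bedrock-agent-runtime")

    response = client.invoke_agent(
        agentId=os.environ["agent_id"],             # hypothetical env var
        agentAliasId=os.environ["agent_alias_id"],  # hypothetical env var
        sessionId=str(uuid.uuid4()),                # a fresh session for each scheduled run
        inputText="Send this period's upskilling recommendations to all employees.",
    )
    return {"statusCode": 200, "body": collect_completion(response)}
```

Using a new session ID per run keeps each six-monthly batch independent of any earlier conversation state.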

Walk-Through

In this walk-through we focus on creating the Bedrock Agent itself, along with the tools we supply to it.

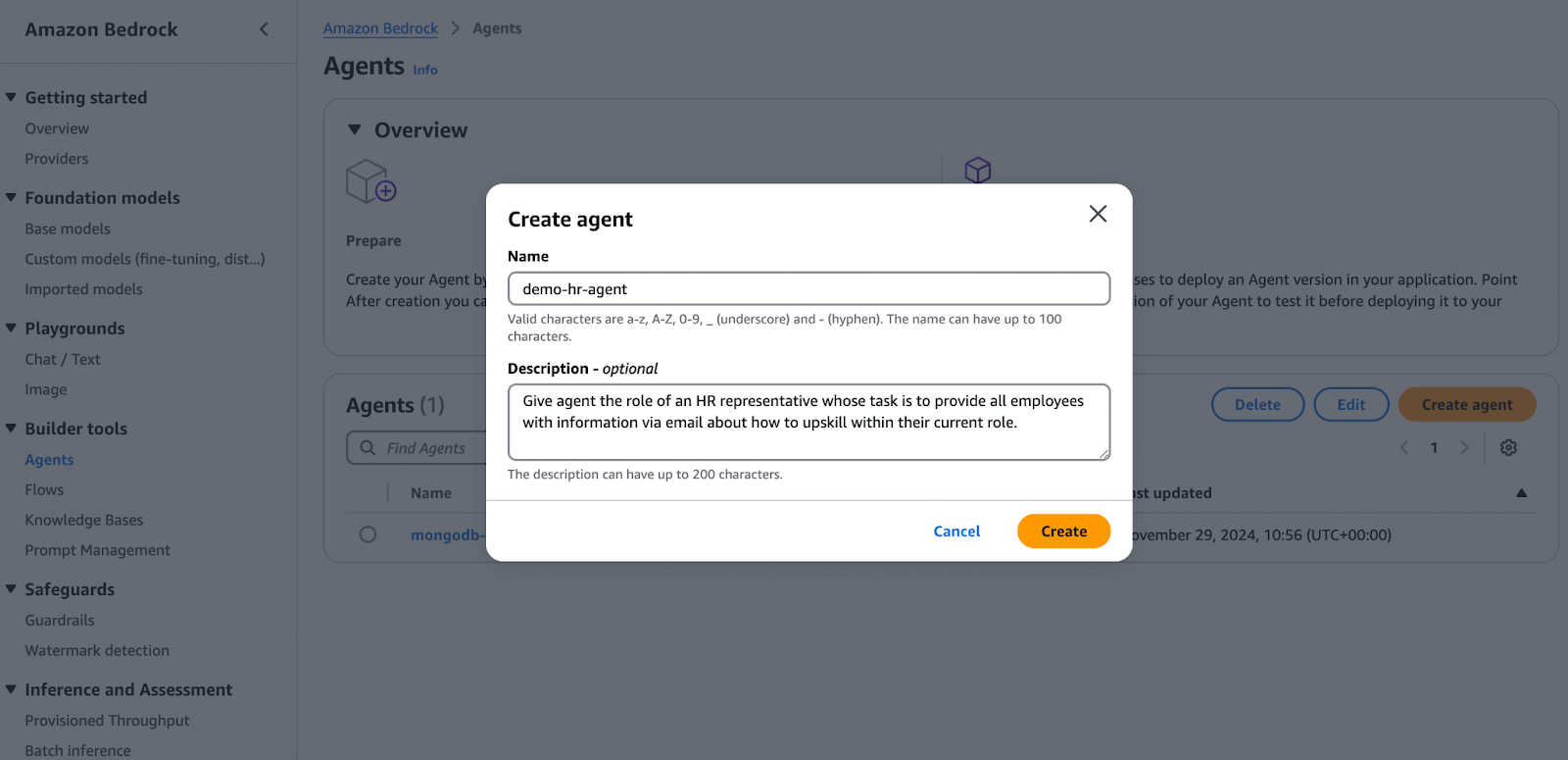

1. Defining the Agent

Step 1.

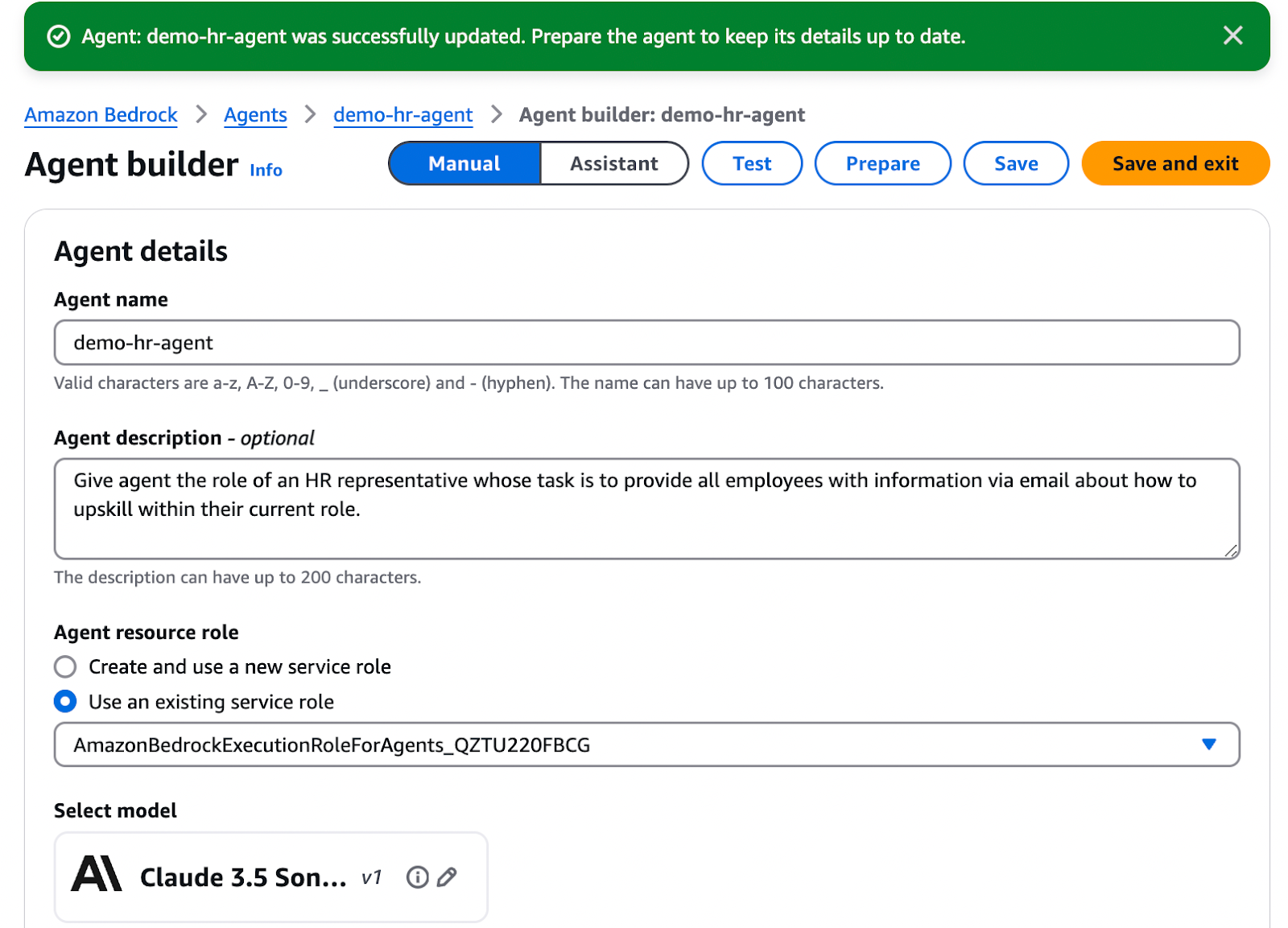

Navigate to AWS Bedrock → Agents, select ‘Create Agent’, give your agent a name and click Create.

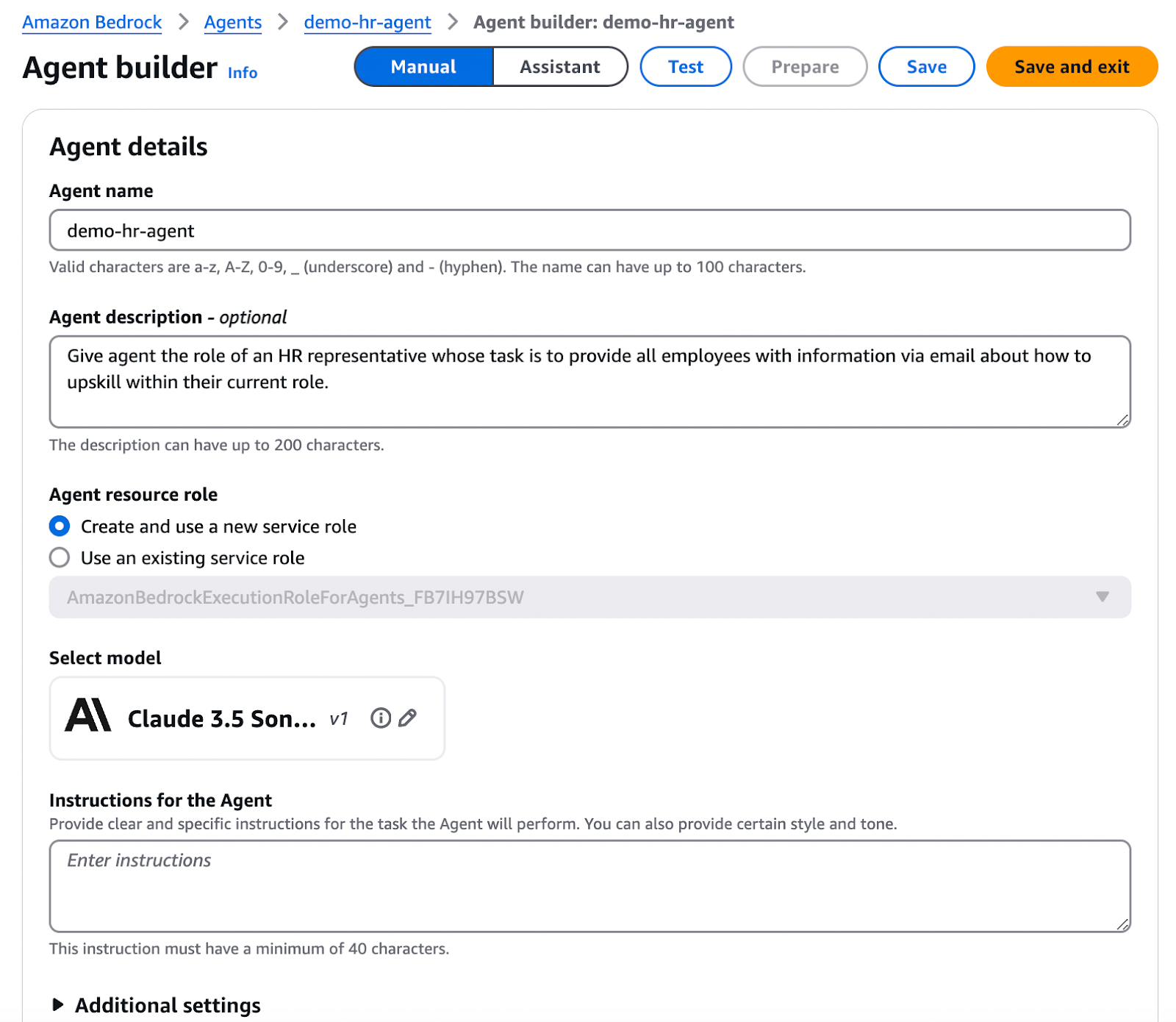

Step 2.

Assign your agent a resource role and select a base LLM. Note that you might need to navigate to Foundation models → Base models within Bedrock to enable access to particular models.
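The console steps for defining the agent also have an SDK equivalent. A minimal sketch using the `bedrock-agent` client's `create_agent` call is below; the agent name is a placeholder, the role ARN and model ID are whatever you chose in the console, and the instruction is the text described in Step 3. The client is passed in so the function can be tried against a stub.

```python
def create_hr_agent(client, role_arn, model_id, instruction, name="hr-upskilling-agent"):
    """Create the agent (in its DRAFT version) and return its ID.

    client is expected to be boto3.client("bedrock-agent").
    """
    response = client.create_agent(
        agentName=name,                 # placeholder name
        agentResourceRoleArn=role_arn,  # the resource role chosen in Step 2
        foundationModel=model_id,       # the base LLM chosen in Step 2
        instruction=instruction,        # the instructions from Step 3
    )
    return response["agent"]["agentId"]
```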

Step 3.

At the heart of the agent are its instructions. Enter instructions that tell the LLM what its role is. The following instructions were used in this demonstration.

You are representing an HR type body in a company. You should be making recommendations to all employees in order for them to enhance their skills and abilities in their professional roles. This should be in email format like the following template.

_____________

Hey \<name\>!

In order to up-skill in your profession here are some courses you might be interested in:

- Advanced Leadership Training (http://leadershipcourse.com) [Online]

- Data Analysis Workshop (http://dataanalysis.com) [London]

- Effective Communication Skills (http://commskills.com) [Manchester]

Best regards,

HR Department

_____________

Steps to carry out:

1. Fetch Employee Data

Receive details like Role, Department, Name and Location for each employee.

2. Search for Relevant Courses

Find courses related to their role/skills and their location. You can invoke this function as many times as you see fit with as many appropriate queries as required.

3. Prioritise Recommendations

Rank the courses based on the following priority: proximity, relevance to the job role and course ratings or popularity.

4. Generate a Personalised Email

Craft a message tailored to the employee. It should include their name and recommended courses with details (name, location, description) and with a clear call to action (e.g. "click here to enroll").

5. Send the Email

Send the generated email contents to the AWS SQS queue ready to be distributed to employees.
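The agent writes each email itself. If you want a fixed reference to compare its output against, for example in a test, the template in the instructions can be reproduced by a small helper. `format_email` and its `(title, url, location)` tuples are hypothetical and not part of the demo.

```python
def format_email(name, courses):
    """Render the HR template; courses is a list of (title, url, location) tuples."""
    lines = [
        f"Hey {name}!",
        "",
        "In order to up-skill in your profession here are some courses you might be interested in:",
        "",
    ]
    # One bullet per course, matching "- Title (url) [Location]" in the template
    for title, url, location in courses:
        lines.append(f"- {title} ({url}) [{location}]")
    lines += ["", "Best regards,", "", "HR Department"]
    return "\n".join(lines)
```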

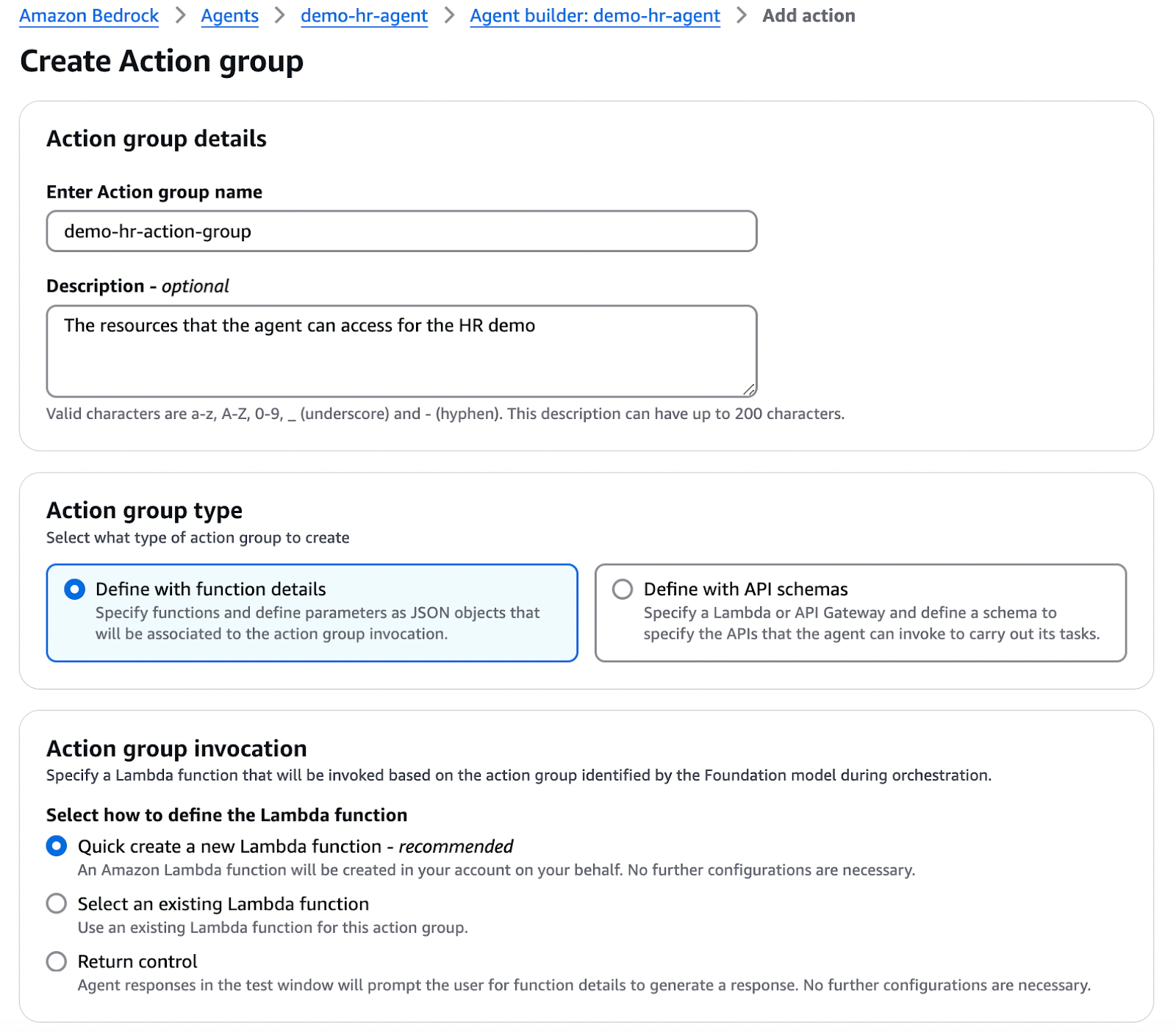

2. Defining the Action Group

Step 1.

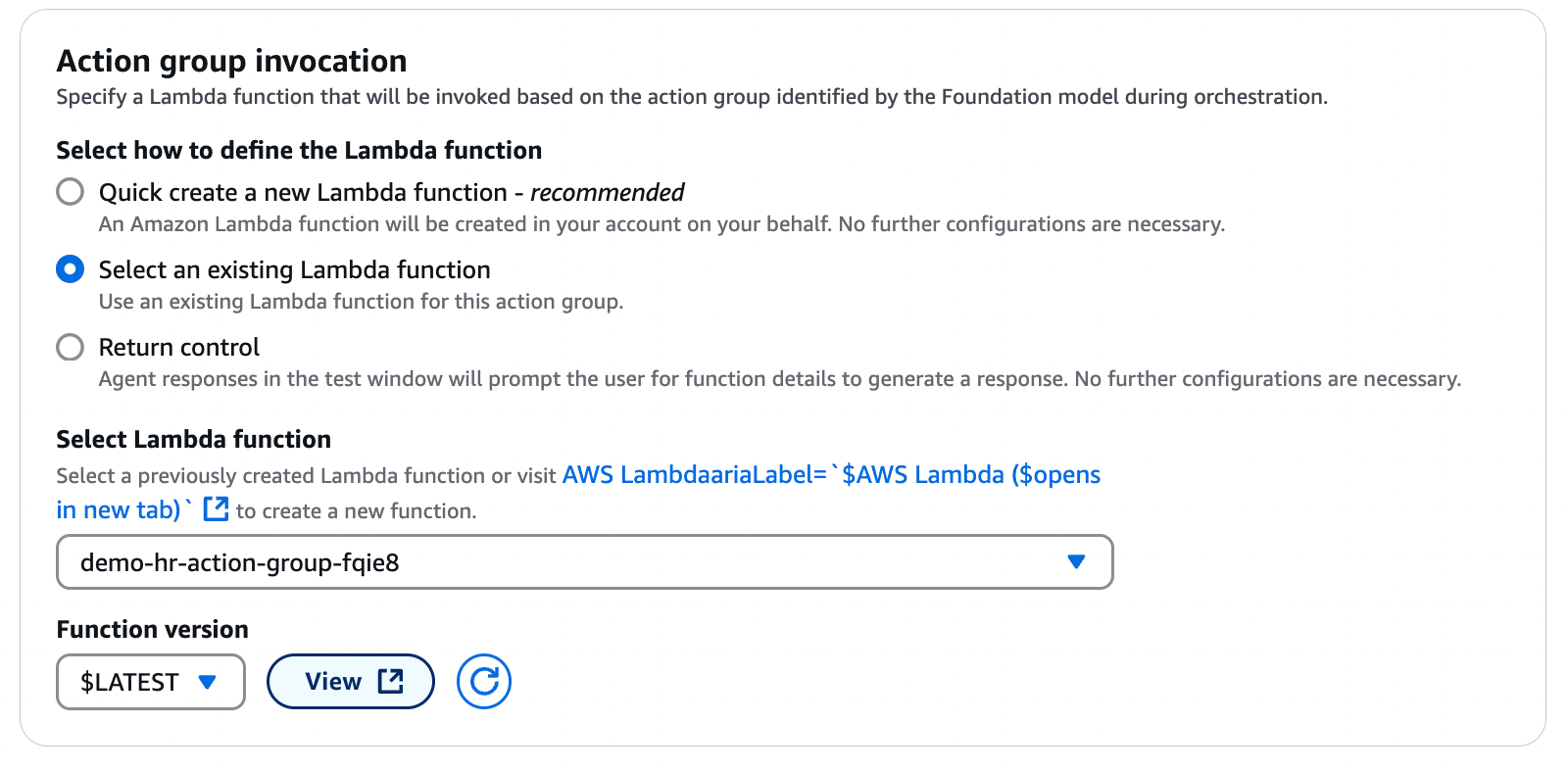

Next, add the AWS Lambda function as an action group. Give your action group a name and choose to 'Quick create' a new Lambda function. This option is preferable to selecting an existing function because the access permissions are set up for you: without creating any AWS IAM resources yourself, the agent can invoke the Lambda function by default.

Step 2.

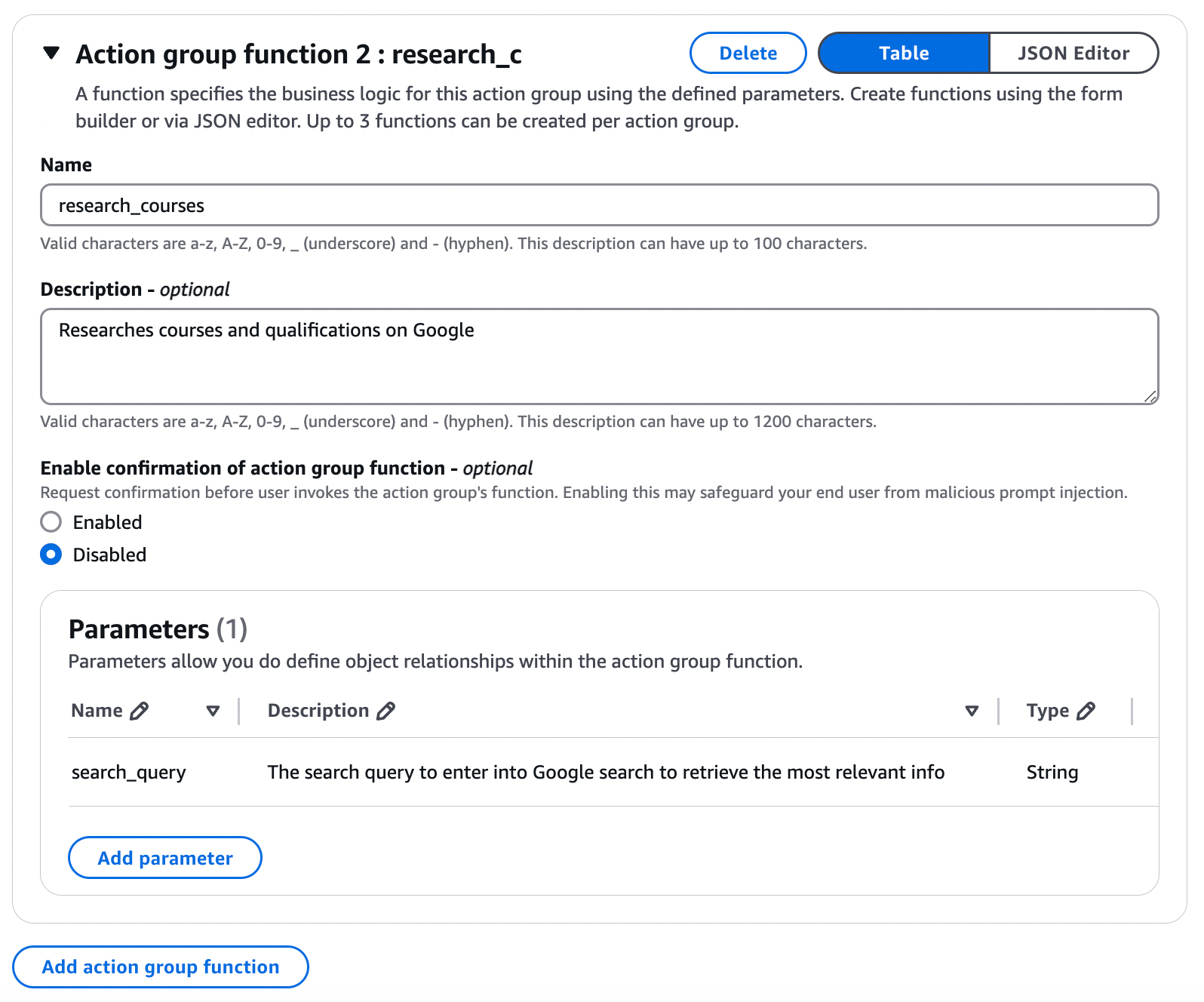

Set up your action group functions. The UI prompts you to define each function, and the function names should match the Python function names we will see later. Add as many functions as needed; the screenshot shows an example function with its input parameter. Once you have configured all the functions, click Create to create the action group.
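The same action group can be defined through the `bedrock-agent` client's `create_agent_action_group` call, using a function schema whose names match the Python functions in the Lambda. This is a sketch: the descriptions and action group name are illustrative, the parameter names `search_query` and `email` are taken from the Python functions defined later, and the Lambda ARN is whatever your function's ARN is.

```python
def build_function_schema():
    """Function definitions mirroring the three Python tools in the Lambda."""
    return {
        "functions": [
            {
                "name": "get_mongodb_data",
                "description": "Return name, role, location and department for every employee.",
            },
            {
                "name": "research_courses",
                "description": "Search the web for courses matching a query.",
                "parameters": {
                    "search_query": {"type": "string", "description": "Search terms", "required": True},
                },
            },
            {
                "name": "send_mail_to_sqs",
                "description": "Queue a finished email for distribution.",
                "parameters": {
                    "email": {"type": "string", "description": "Full email text", "required": True},
                },
            },
        ]
    }


def create_action_group(client, agent_id, lambda_arn, name="hr-tools"):
    # client is expected to be boto3.client("bedrock-agent"); "hr-tools" is a placeholder
    return client.create_agent_action_group(
        agentId=agent_id,
        agentVersion="DRAFT",
        actionGroupName=name,
        actionGroupExecutor={"lambda": lambda_arn},
        functionSchema=build_function_schema(),
    )
```

Note that the descriptions matter: the agent relies on them when deciding which tool to call.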

Step 3.

Save your action group details and open up your new Lambda function for further configuration.

3. Defining the Lambda Function

You can open your new Lambda function from the Bedrock Agents configuration interface.

The Lambda function serves multiple roles, each implemented as a separate Python function and called from a single Lambda handler. The following steps outline the code for the three functions, which should be wrapped in the Lambda handler. The example handler is shown in the demonstration video.
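Since the handler itself only appears in the video, here is a minimal sketch of one. It assumes Bedrock's function-details event format, in which the requested function name arrives in `event["function"]` and its arguments as a list of `{"name", "type", "value"}` entries in `event["parameters"]`, and the reply wraps the tool's result in a `functionResponse` text body. `handle_agent_event` and the `TOOLS` mapping are hypothetical wiring to the three functions defined in the steps that follow.

```python
def params_to_kwargs(event):
    """Turn Bedrock's parameter list into keyword arguments for the tool."""
    return {p["name"]: p["value"] for p in event.get("parameters", [])}


def handle_agent_event(event, tools):
    """Call the requested tool and wrap its result in the agent response shape."""
    function_name = event["function"]
    tool = tools.get(function_name)
    if tool is None:
        body = f"Unknown function: {function_name}"
    else:
        body = tool(**params_to_kwargs(event))

    return {
        "messageVersion": "1.0",
        "response": {
            "actionGroup": event["actionGroup"],
            "function": function_name,
            "functionResponse": {"responseBody": {"TEXT": {"body": body}}},
        },
    }


# In the deployed Lambda the mapping would point at the real functions, e.g.
# TOOLS = {"get_mongodb_data": get_mongodb_data,
#          "research_courses": research_courses,
#          "send_mail_to_sqs": send_mail_to_sqs}
#
# def lambda_handler(event, context):
#     return handle_agent_event(event, TOOLS)
```

Returning a text body for an unknown function name, rather than raising, lets the agent see what went wrong and try again.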

Step 1.

Query the MongoDB data. The following snippet retrieves all employee documents from a MongoDB collection and trims each one to the fields the agent needs.

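```python
import json
import os

from pymongo import MongoClient


def get_mongodb_data():
    mongo_uri = os.getenv("mongo_uri")
    db_name = "agent_demo"
    collection_name = "employee_data"

    # Connect to the MongoDB server (closed automatically when the block exits)
    with MongoClient(mongo_uri) as client:
        # Specify the database and collection
        collection = client[db_name][collection_name]

        # Fetch all documents, returning only the fields the agent needs
        projection = {"_id": 0, "First_name": 1, "Last_name": 1,
                      "Title": 1, "Place": 1, "Department": 1}
        data = list(collection.find({}, projection))

    trimmed_data = [
        {
            # Single quotes inside the f-string keep this valid before Python 3.12
            "Name": f"{i['First_name']} {i['Last_name']}",
            "Role": i["Title"],
            "Location": i["Place"],
            "Department": i["Department"],
        }
        for i in data
    ]

    # Serialise as JSON so the agent receives well-formed text
    return json.dumps(trimmed_data)
```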
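The function above opens and closes a connection on every call. A common Lambda pattern, and a possible alternative here, is to create the client once per execution environment and reuse it across warm invocations. A minimal sketch of that lazy initialisation follows; `get_client` is a hypothetical helper, and in the Lambda you would pass `MongoClient` as the factory, e.g. `get_client(MongoClient)["agent_demo"]["employee_data"]`.

```python
import os

_client = None  # cached for the lifetime of the Lambda execution environment


def get_client(factory):
    """Return a cached client, creating it with factory(uri) on first use."""
    global _client
    if _client is None:
        _client = factory(os.getenv("mongo_uri"))
    return _client
```

With this pattern you would not close the client at the end of each call, since later invocations reuse it.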

Step 2.

Research relevant courses. The agent uses the retrieved employee data to build search queries, and this function runs each query against the Google Custom Search JSON API. Results are capped at the top five so that the response stays small enough to return to the agent.

```python
import json
import os

import requests


def research_courses(search_query):
    search_url = "https://www.googleapis.com/customsearch/v1"
    params = {
        "key": os.getenv("google_api_key"),
        "cx": os.getenv("google_api_cx"),
        "q": search_query,
    }

    # Make the API request (with a timeout so a slow call can't hang the Lambda)
    response = requests.get(search_url, params=params, timeout=10)
    response.raise_for_status()  # Raise an error for HTTP codes 4xx/5xx

    # Parse the search results
    search_results = response.json()
    items = search_results.get("items", [])

    # Extract the top 5 results for the agent to handle
    top_courses = [
        {
            "title": item.get("title"),
            "link": item.get("link"),
            "snippet": item.get("snippet"),
        }
        for item in items[:5]
    ]

    # Return search results as a JSON string
    return json.dumps(top_courses, indent=2)
```
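Capping at five results is a simple way of bounding the response size. If snippet lengths vary a lot, an alternative is to cap by serialised size instead. A minimal sketch follows, where `fit_to_budget` is a hypothetical helper and `MAX_BYTES` is an illustrative budget rather than a documented Bedrock limit.

```python
import json

MAX_BYTES = 4000  # illustrative budget, not an official limit


def fit_to_budget(results, max_bytes=MAX_BYTES):
    """Keep results in order until the JSON string would exceed max_bytes."""
    kept = []
    for result in results:
        candidate = kept + [result]
        if len(json.dumps(candidate, indent=2).encode("utf-8")) > max_bytes:
            break
        kept = candidate
    return json.dumps(kept, indent=2)
```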

Step 3.

Send emails to the SQS queue. This function queues each email, ready for future distribution to employees.

```python
import json
import os

import boto3


def send_mail_to_sqs(email):
    # Initialise the SQS client
    sqs = boto3.client("sqs")

    # Get the SQS queue URL from environment variables
    queue_url = os.getenv("sqs_queue_url")

    response = sqs.send_message(
        QueueUrl=queue_url,
        MessageBody=json.dumps(email),  # Ensure the message body is serialised as JSON
    )

    return f"Message {response.get('MessageId')} queued successfully"
```
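Because distribution is out of scope, it can be handy after a test run to check what the agent queued. A sketch using the SQS `receive_message` call with long polling is below; `peek_queued_emails` is a hypothetical helper. It does not delete messages, so they become visible again once the queue's visibility timeout expires.

```python
import json


def peek_queued_emails(sqs, queue_url, max_messages=10):
    """Read (without deleting) up to max_messages emails from the queue.

    sqs is expected to be boto3.client("sqs").
    """
    response = sqs.receive_message(
        QueueUrl=queue_url,
        MaxNumberOfMessages=max_messages,  # SQS allows 1-10 per call
        WaitTimeSeconds=5,                 # long polling
    )
    # Bodies were written with json.dumps, so decode them back
    return [json.loads(m["Body"]) for m in response.get("Messages", [])]
```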

Once you have defined your Lambda function, you are ready to test your agent!

Step 4.

Prepare your agent. Provided you have saved your agent configuration, click ‘Prepare’ to prepare the agent so you can move on to testing.
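Preparing can also be scripted. A sketch is below that uses the `bedrock-agent` client's `prepare_agent` call, then polls `get_agent` until the agent's status reads `PREPARED`. The polling interval and attempt count are arbitrary choices, and `prepare_and_wait` is a hypothetical helper name.

```python
import time


def prepare_and_wait(client, agent_id, poll_seconds=5, max_attempts=24):
    """Prepare the DRAFT agent and wait until Bedrock reports it as PREPARED.

    client is expected to be boto3.client("bedrock-agent").
    """
    client.prepare_agent(agentId=agent_id)
    for _ in range(max_attempts):
        status = client.get_agent(agentId=agent_id)["agent"]["agentStatus"]
        if status == "PREPARED":
            return status
        if status == "FAILED":
            raise RuntimeError(f"Agent {agent_id} failed to prepare")
        time.sleep(poll_seconds)
    raise TimeoutError(f"Agent {agent_id} not prepared after {max_attempts} checks")
```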

Now we should have a finished working HR agent. Let’s see it in action!

Summary

Through this two-part series, we've showcased two powerful and flexible approaches for integrating AWS Bedrock with MongoDB Atlas. These integrations highlight how MongoDB Atlas serves as an exceptional foundation for AI solutions—be it chatbots, intelligent agents, or other automation processes—by enabling seamless access to data.

Hopefully the synergy between AWS Bedrock's AI capabilities and MongoDB Atlas's modern and scalable platform has demonstrated how effortlessly AI solutions can be designed and deployed to meet dynamic business needs.